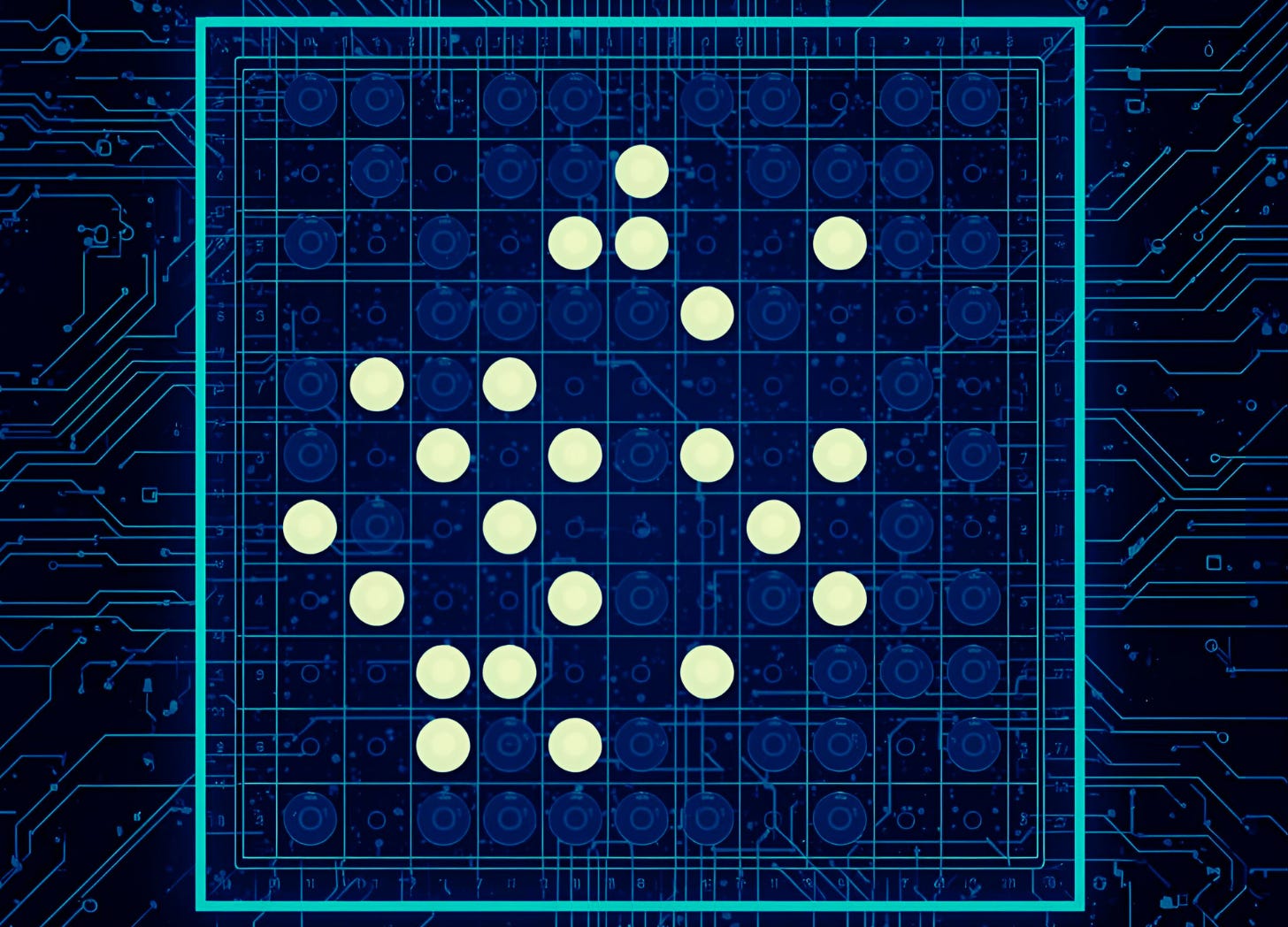

Move 37

AlphaGo, my next move and why AI research is so important right now

Lee Sedol, an 18-time world Go champion, leaves the game midway to take a smoke break on the terrace. The cameras follow him. AlphaGo, the computer program he is playing against, carries on. A human opponent might have paused and waited, but AlphaGo neither witnesses nor understands the very human need for a moment to breathe.

It's the second game of five. Lee, who started the series with utmost confidence in human creativity and intuition, lost the first game; he feels dejected but hasn't given up. Then AlphaGo plays move 37, a move no human would make: by AlphaGo's own estimate, a human would play it with a probability of about 1 in 10,000. When Lee returns and sees the move, he realises AlphaGo isn't just a statistical program. This system is creative, making almost god-like moves. He feels powerless as he loses the second and third games. No one in that room is celebrating. We are humans watching a fellow human be defeated by a machine at a game he has devoted his life to mastering.

Then game four happens. AlphaGo resigns. The room cheers. Lee had played move 78, "God's move" (again), the wedge that sent AlphaGo into what can only be described as delusion. When asked why he played it, Lee said it was the only possible move. But it wasn't: it, too, was a 1 in 10,000 move.

This is what understanding model behaviour actually looks like. It's what happens when AI systems interact with real humans in complex, emotionally charged scenarios - not in test environments, but in the messy reality of human experience.

The Reality of Model Behaviour

The conversations around AI tend to swing between two extremes: the technical researchers focused on model capabilities, and the business folks worried about replacement scenarios. But there's a third space that's barely being explored - how AI models actually behave when humans are in the loop, and what that means for both the technology and the humans interacting with it.

My background in behavioural economics keeps pulling me back to this question. We understand human psychology pretty well, and we're getting better at understanding what models can do in controlled settings, but we're terrible at understanding how they behave once real people are using them. That's the gap I want my research to fill.

Model behaviour isn't just what an AI system can do; it's how it actually acts when deployed. It's AlphaGo playing on through Lee's break, and playing move 37, with no grasp of the human and emotional context around it, then going "delusional" when faced with Lee's equally improbable response. It's the emergent patterns, failures, and adaptations that only surface when complex human psychology meets machine learning in real-world scenarios.

We've spent enormous resources on reward-based training to make AI systems productive and accurate, yet deception and hallucination keep emerging in unexpected ways. These aren't just technical bugs; they're behavioural patterns that reveal something fundamental about how these systems operate when they encounter the full spectrum of human complexity.

So What’s Next?

My broad exploration phase is over. I'm narrowing my research focus to model behaviour and its applied use cases. Specifically, I want to understand how AI systems influence the way people make sense of information, and why these effects seem to be asymmetric and enduring in ways we didn't anticipate.

Think about it this way: we wouldn't launch a consumer product without understanding user behaviour patterns. Yet we're deploying AI systems without really understanding their behavioural patterns in human contexts. That seems backwards.

I'm not interested in writing academic papers that sit on shelves. I want to work directly with founders building AI products to design experiments that reveal how humans actually behave when using their tools. Think embedded, applied research: understanding cognitive biases in AI interactions, how people behave differently under stress when AI is involved, and the decision-making patterns that emerge over time.

My approach combines behavioural economics frameworks with the practical insights I've gathered from building products that a million people have actually used. I want to help founders design their products as research instruments, gathering real behavioural data that improves both their product and our understanding of human-AI interaction patterns.

I'm also particularly interested in cases like Anthropic's Constitutional AI work, where the model's behaviour is explicitly shaped by a written set of principles reflecting human values, ethics and truth-seeking. But I want to go deeper: what happens when these models encounter edge cases? How do they fail? And most importantly, how do humans adapt their behaviour in response?

This research will inform my work here on The Third Frontier as well as upcoming collaborations, where I'm exploring how human philosophical alignment and AI development intersect. Because ultimately, this isn't just a technical problem - it's a human one.

Why Should This Matter?

We're building all of this so humans can live better lives, but our alignment with that purpose is more fragile than we assume. What if we invest billions of dollars in AI systems, only to have humans collectively abandon them out of deep discomfort with a lack of meaning at work or purpose in daily life?

My experience building products that scaled to millions of users taught me that user behaviour is rarely what you expect. The same is true for AI. But there's something deeper here that my background in both behavioural economics and consciousness studies helps me see: we're not just dealing with utility and efficiency. We're dealing with how humans find meaning when intelligence becomes abundant.

The interesting stuff happens in the messy middle ground where human psychology meets machine learning. And if we get the behavioural dynamics wrong, all the technical progress in the world won't matter.

If this makes sense to you, here is how you can help.

I'm specifically looking for founders who are open to embedding behavioural research into their product development process. If you're building AI tools or products and want to understand not just whether people use them but how they change human decision-making, cognitive patterns, and stress responses, let's design some experiments together. The best insights will come from real products with real users, not random Google surveys.

If you know someone doing interesting work in this space or if you're working on something that touches these themes, I'd love to hear from you.

Over the coming months, I hope to produce concrete research output and insights from my work, which I will share here on a weekly basis. I'm also open to research collaborations, particularly with teams that have access to real-world AI deployment data and want to understand the human behavioural patterns emerging from these interactions.

The questions I'm asking might seem abstract, but they have real implications on the ground. How we design these interactions will shape how humans and AI coexist.

That feels important enough to get right.

Thanks for following along on this journey. We are still so early. More soon.

Signing off,

Kalyani Khona

Looking forward to more write-ups on The Third Frontier.